Aug 12

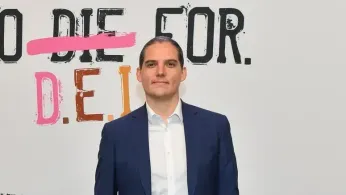

Meta Appoints Anti-DEI Figure Robby Starbuck as AI Bias Advisor After Lawsuit Settlement

Meta has appointed conservative activist Robby Starbuck as an AI bias advisor, formalizing a consulting role as part of a settlement that resolved his defamation lawsuit against the company over alleged false statements generated by Meta’s AI chatbot. In a joint statement reported by Fox Business, Meta and Starbuck said they would collaborate “to address issues of ideological and political bias and minimize the risk that the model returns hallucinations,” with Starbuck emphasizing his intent to be “a voice for conservatives.”

The appointment follows Starbuck’s complaint that Meta’s AI incorrectly associated him with the January 6 Capitol riot and conspiracy movements, an incident he said caused reputational harm. Coverage of the settlement indicates that his advisory remit focuses on reducing “ideological and political bias” and improving factual accuracy in Meta’s AI outputs.

The Advocate characterized Starbuck as an anti-DEI and anti-LGBTQ+ conspiracy theorist and reported that the appointment’s stated goal includes making Meta’s chatbot less “woke.” The outlet’s report situates the move within ongoing political debates over content moderation and perceived ideological tilt in AI systems.

Fox Business further reported that Meta has recently rolled back internal Diversity, Equity, and Inclusion policies, aligning with wider pressure to address alleged anti-conservative bias. The outlet also noted that Meta framed the Starbuck collaboration as part of broader work to improve AI accuracy and reduce harmful “hallucinations.”

Independent coverage summarized the settlement as an arrangement under which Starbuck would advise Meta on ideological and political bias in its AI systems, describing the move as a response to concerns about fairness and neutrality in AI. These reports also referenced increased scrutiny of AI "wokeness" amid shifting U.S. political priorities in 2025.

For LGBTQ+ communities, the appointment raises concerns about how Meta will define and detect "bias" in AI, and whether efforts to reduce alleged ideological skew could deprioritize protections for marginalized users. LGBTQ+ advocates have historically emphasized the need for AI systems to detect and mitigate harassment, misgendering, hateful or discriminatory content, and false narratives that target transgender people and broader LGBTQ+ communities. These are areas where content moderation and safety tooling intersect with fairness frameworks.

The Advocate’s report underscores that Starbuck has publicly opposed corporate DEI programs and amplified claims aligned with anti-LGBTQ+ rhetoric, positioning his selection as significant for LGBTQ+ stakeholders who rely on robust safety and inclusion standards on major platforms. The framing of AI “wokeness” as a problem, LGBTQ+ advocates caution, can be used to justify weakening initiatives designed to counter discrimination, misinformation, and targeted abuse.

Fox Business quoted Starbuck as saying he aims to ensure conservatives are treated fairly by AI, highlighting the competing priorities Meta must balance: addressing concerns about political viewpoints while maintaining safeguards that protect users against harmful and defamatory outputs. This balance is particularly consequential for LGBTQ+ users, who face outsized levels of online harassment and misinformation and depend on platform policies that enforce anti-hate and anti-bullying standards.

Meta has not, in the sources cited, detailed specific changes it will make to AI training data, guardrails, or evaluation benchmarks as a result of Starbuck’s advisory role. The company’s joint statement with Starbuck referenced “tremendous strides” in improving accuracy and mitigating ideological bias but did not enumerate technical measures, timeframes, or governance structures for oversight. Absent those details, it remains unclear how Meta will weigh protections for targeted communities alongside goals to reduce alleged political bias, a tension that watchdog groups and LGBTQ+ organizations are likely to monitor closely.

Several outlets place the appointment within a broader shift at Meta around DEI and governance. Fox Business reported that Meta ended its corporate DEI policies earlier this year, a move that coincided with leadership changes and ongoing debates over perceived political bias in technology platforms. This context may intensify scrutiny from civil society groups, who argue that dismantling DEI structures can affect representation on the teams that build and evaluate AI systems, including those responsible for safety modules that help protect LGBTQ+ people and other marginalized users.

Advocacy groups and researchers will likely call for transparent reporting on any AI policy changes, independent audits, and community consultation, including with LGBTQ+ organizations, to ensure that efforts to reduce ideological bias do not compromise anti-hate protections. As of publication, none of the cited sources report new audit commitments or community consultation frameworks tied specifically to Starbuck’s role.